Hearing, sound and audio for immersion

The importance of sound

Hearing (or audio perception) is the process by which physical vibrations are picked up by the ear and passed to the nervous system, then experienced by the brain as sound. The brain interprets this sound information using pattern recognition. In this way a great deal can be understood about one’s environment, and the same system is used for receiving verbal communication.

Hearing and vision have played important roles in media through the 20th and into the 21st centuries due to the popularity and ubiquity of cinema and television. When I ask people to describe films they’ve watched, they generally convey the story and narrative, and perhaps describe some visuals such as frames and sequences. It’s less common for people to recall and comment on the sounds in a film. This is because well-crafted sound tends to bypass the conscious mind, resulting in a more direct influence on the audience’s emotions. Sounds that are out of place or poorly mixed, however, will distract the audience and reduce immersion. Used with care, sound therefore lets content creators greatly enhance an audience’s feeling of immersion.

The importance of sound to an audience’s experience cannot be overstated. Even so-called silent movies were not truly silent: screenings were almost always accompanied by live musicians.

“audiences seem to be more annoyed by poor sound quality than by poor, set, costume, make-up and cinematography”

Akam 2018

The effects of sound in film are just as relevant to immersive media such as virtual reality, which has the added benefit of giving the listener an enhanced perception of sound source direction, further increasing immersion.

Creators can use sound to their advantage to increase the impact or realism of an experience, as well as to set the mood and atmosphere for moments and scenes within a piece.

3D audio for immersion

To further deepen the immersion of a viewer in a virtual world, 3D audio can be used. 3D audio is enabled by digital signal processing which allows for virtual placement of sounds within a 3D space in relation to the position of the viewer/listener.

In the real world sound waves are transmitted through substances and reflected off surfaces in highly complex ways which can be analysed and modelled using physics.

An example of a real sound source is a marble dropping on a stone floor at the far end of a corridor. The frequencies within the sound are primarily determined by the size of the marble and the dimensions of the floor. Some of the sound waves travel directly down the corridor to the ears of the listener, while others travel to other surfaces where they are reflected, and so on. Each of the listener’s ears will hear a slightly different sound due to its different position. Some sound may pass around or through the head before reaching the eardrums, further altering the waves. All of this occurs over time: sound travels through different substances at different rates, for example at roughly 340 meters per second through air at room temperature. Our brains interpret these highly complex signals over time, which helps us understand much about the environment and the sound sources.
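The timing side of the corridor example can be sketched in a few lines of Python. This is only a rough illustration under stated assumptions: sound is treated as travelling in straight lines, the speed of sound is fixed at about 343 m/s, and the ear spacing of 0.18 m and the function names are hypothetical values chosen for the example. Reflections and the shadowing effect of the head are ignored.

```python
import math

SPEED_OF_SOUND_AIR = 343.0  # m/s, approximate value for air at about 20 °C (assumption)

def arrival_delay_ms(distance_m, speed=SPEED_OF_SOUND_AIR):
    """Time in milliseconds for sound to travel distance_m in a straight line."""
    return distance_m / speed * 1000.0

def ear_positions(head_xy, ear_spacing_m=0.18):
    """Left and right ear positions for a listener facing +y.

    ear_spacing_m is an assumed, illustrative head width.
    """
    x, y = head_xy
    half = ear_spacing_m / 2.0
    return (x - half, y), (x + half, y)

def interaural_time_difference_ms(source_xy, head_xy):
    """Left-ear delay minus right-ear delay, in milliseconds.

    A positive value means the sound reaches the right ear first.
    """
    left, right = ear_positions(head_xy)
    d_left = math.dist(source_xy, left)
    d_right = math.dist(source_xy, right)
    return arrival_delay_ms(d_left) - arrival_delay_ms(d_right)

# A marble landing 20 m down the corridor and 5 m to the listener's right.
marble = (5.0, 20.0)
listener = (0.0, 0.0)
print("direct path delay (ms):", arrival_delay_ms(math.dist(marble, listener)))
print("interaural time difference (ms):", interaural_time_difference_ms(marble, listener))
```

The difference between the two ears is a small fraction of a millisecond, yet it is one of the cues the brain uses to judge direction.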

How a sound is altered as it travels from its source to one’s eardrums can be modelled in an algorithm. This algorithm can then be applied to audio signals to mimic the natural waves. The main techniques used to achieve 3D sound are head-related transfer function (HRTF) filters and crosstalk cancellation.
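As a minimal sketch of the HRTF idea, the filter for each ear can be applied by convolving a mono signal with a head-related impulse response (the time-domain form of an HRTF). The impulse responses below are hand-made, hypothetical values chosen only to show a delayed and quieter signal at the far ear. Real responses are measured and contain hundreds of samples, and real systems would normally use an optimised convolution rather than this plain loop.

```python
def convolve(signal, impulse_response):
    """Direct-form convolution of two lists of samples."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out

# Hypothetical impulse responses for a source on the listener's right:
# the right ear hears it immediately, the left ear hears it later and quieter.
HRIR_RIGHT = [1.0, 0.3]
HRIR_LEFT = [0.0, 0.0, 0.0, 0.5, 0.2]

def binauralise(mono, hrir_left, hrir_right):
    """Return (left, right) ear signals for a mono input."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)

click = [1.0, 0.0, 0.0, 0.0]  # a single-sample click
left, right = binauralise(click, HRIR_LEFT, HRIR_RIGHT)
print("left ear: ", left)
print("right ear:", right)
```

Played over headphones, the delay and level difference between the two outputs is what makes the click appear to come from one side.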

In a virtual reality experience, the user’s live head position data can be fed into the 3D audio algorithms in real time, so that sounds appear to move in relation to the user’s movements. Latency should stay below a few dozen milliseconds so that the user does not perceive any lag, which would reduce immersion and presence.
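The head-tracking step can be sketched as follows, considering only yaw (turning the head left and right). The function names are hypothetical, and both angles are assumed to be measured in degrees in the same rotational direction. The second helper shows how much latency an audio buffer alone contributes, which counts towards the overall budget mentioned above.

```python
def wrap_degrees(angle):
    """Wrap an angle in degrees to the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0

def relative_azimuth(source_azimuth_deg, head_yaw_deg):
    """Direction of a fixed source relative to where the head is now facing."""
    return wrap_degrees(source_azimuth_deg - head_yaw_deg)

def buffer_latency_ms(frames_per_buffer, sample_rate_hz):
    """Latency added by one audio buffer of the given size."""
    return frames_per_buffer / sample_rate_hz * 1000.0

# A source fixed at 30 degrees stays put in the world while the head turns.
for yaw in (0.0, 30.0, 90.0):
    print("head yaw", yaw, "-> source appears at", relative_azimuth(30.0, yaw))

print("512 frames at 48 kHz adds (ms):", buffer_latency_ms(512, 48000))
```

The relative angle would then be used to choose or interpolate the filters applied for each ear on every update.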

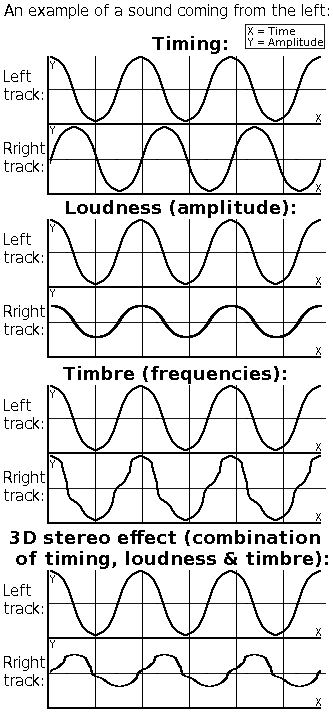

Traditional sound formats were mono and then stereo. Stereo uses two discrete channels of audio which can be sent to separate left and right speakers, with the ability to pan sounds from one to the other for some spatial control. Surround sound extends this concept by using more speakers surrounding the listener.
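Stereo panning can be illustrated with a constant-power pan law, one common way of sharing a signal between two channels so that its perceived loudness stays roughly even as it moves. This is a sketch of that one approach, not the only pan law in use; the function name and the -1 to +1 pan range are choices made for this example.

```python
import math

def constant_power_pan(sample, pan):
    """Split a mono sample into (left, right) gains.

    pan ranges from -1.0 (fully left) through 0.0 (centre) to +1.0 (fully right).
    """
    angle = (pan + 1.0) * math.pi / 4.0  # maps pan to 0 .. pi/2
    return sample * math.cos(angle), sample * math.sin(angle)

for pan in (-1.0, 0.0, 1.0):
    left, right = constant_power_pan(1.0, pan)
    print("pan", pan, "-> left", round(left, 3), "right", round(right, 3))
```

At the centre position each channel carries about 0.707 of the signal, so the combined power matches that of a sound panned fully to one side.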

There are several surround sound formats. One example is ambisonics which, unlike other surround formats, doesn’t carry a discrete channel of audio for each speaker. Instead it uses a sound field representation called B-format, which is decoded to the listener’s speaker setup and can therefore differ between users. The ambisonics format was adopted by YouTube and Facebook for 360° content due to its low computation cost for rotations. Facebook has published a guide along with a free audio plugin, Spatial Workstation, for producing 360° sound. Google also provides a guide and a plugin.
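The reason rotations are cheap can be seen in a small first-order sketch. It assumes the traditional B-format convention (channels W, X, Y, Z with W scaled by 1/√2, X pointing forward, Y to the left and Z up); platforms may use a different channel order and scaling, so treat the details as illustrative. The function names are hypothetical.

```python
import math

def encode_first_order(sample, azimuth_deg, elevation_deg=0.0):
    """Encode a mono sample into first-order B-format (W, X, Y, Z).

    Azimuth is measured counter-clockwise from straight ahead.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample / math.sqrt(2.0)
    x = sample * math.cos(az) * math.cos(el)
    y = sample * math.sin(az) * math.cos(el)
    z = sample * math.sin(el)
    return (w, x, y, z)

def rotate_yaw(b_format, yaw_deg):
    """Rotate the whole sound field about the vertical axis.

    Only X and Y change, using a 2D rotation; W and Z are untouched.
    """
    w, x, y, z = b_format
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    return (w, x * c - y * s, x * s + y * c, z)

front = encode_first_order(1.0, 0.0)
print("source in front:      ", front)
print("field rotated 90 deg: ", rotate_yaw(front, 90.0))
```

Rotating the field costs a handful of multiplications per sample regardless of how many sources were mixed into it. To compensate for a head turn, the field would be rotated by the opposite angle before decoding.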

Summary

Hearing is a vital element for immersion. Technologies are now so advanced that artificial sounds can very closely replicate reality, often with indistinguishable results. Of all the senses, hearing is the one for which we can most accurately recreate the real-world experience. For this reason it is a highly effective focus for immersion and storytelling.

This subject area is extensive and generally very well understood, spanning acoustics, psychoacoustics, psychology, computing, engineering and more. I may well revisit it in the future to look more closely at one or more of these aspects. I feel that sound design should be given high priority from the outset of an immersive project.

Read my previous article on vision here.

References

Arnaldi, Bruno; Guitton, Pascal; Moreau, Guillaume (eds.) (2018). Virtual Reality and Augmented Reality: Myths and Realities. Wiley-ISTE. ISBN 978-1-786-30105-5.

Rumsey, Francis (2001). Spatial Audio. Focal Press. pp. 62–64. ISBN 0-240-51623-0.

Akam, Kingsley Oyong (2018). The indispensability of sound in film production: psychological effect on the audience and challenges to the film director. www.ijriar.com

Credits

Featured image: Morten Bisgaard, Public domain, via Wikimedia Commons